Overview

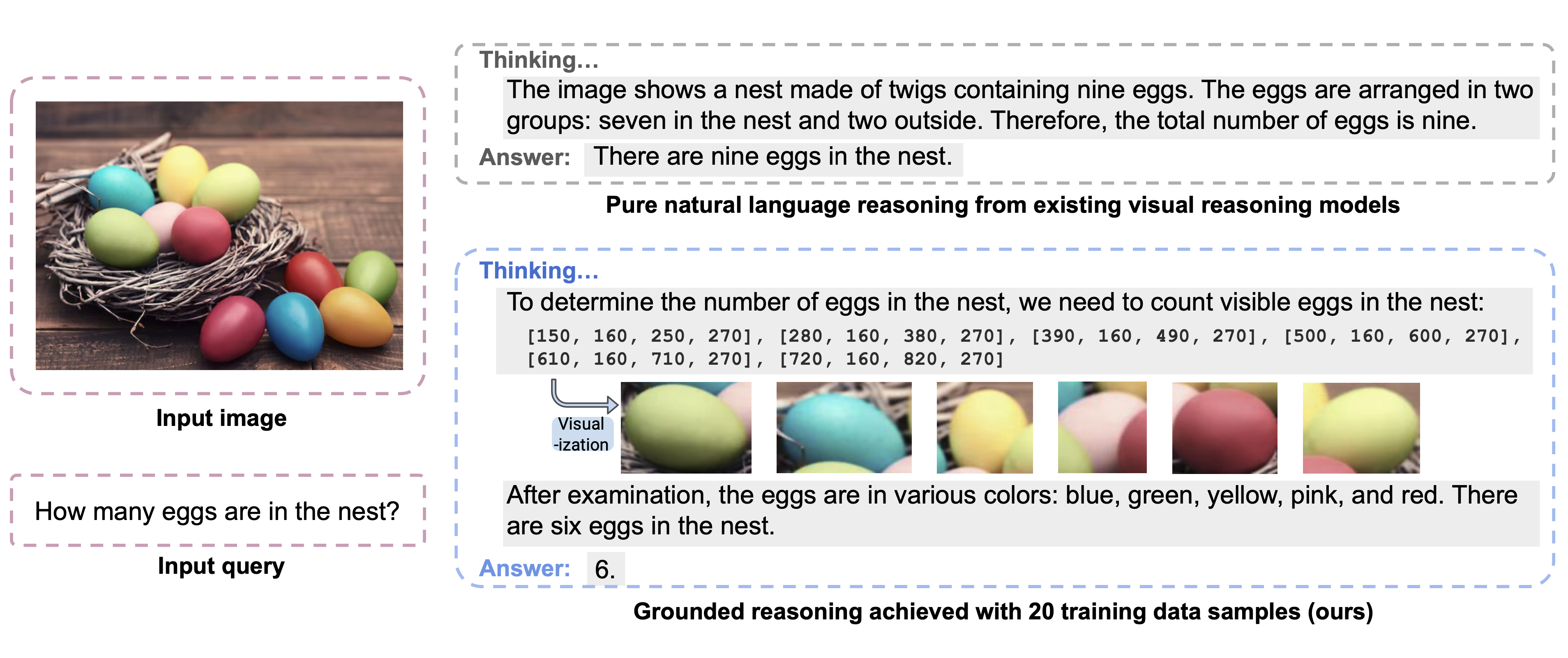

Recent studies have demonstrated the efficacy of using Reinforcement Learning (RL) to build reasoning models that articulate chains of thought before producing final answers. However, despite ongoing advances toward enabling reasoning for vision-language tasks, existing open-source visual reasoning models typically generate reasoning content in pure natural language, with no explicit integration of visual information. This limits their ability to produce clearly articulated and visually grounded reasoning chains. To this end, we propose Grounded Reasoning with Images and Texts (GRIT), a novel method for training MLLMs to think with images. GRIT introduces a grounded reasoning paradigm in which models generate reasoning chains that interleave natural language and explicit bounding box coordinates. These coordinates point to regions of the input image that the model consults during its reasoning process. GRIT is also equipped with a reinforcement learning approach, GRPO-GR, built upon the GRPO algorithm. GRPO-GR employs robust rewards focused on final-answer accuracy and the format of the grounded reasoning output, which eliminates the need for data with reasoning chain annotations or explicit bounding box labels. As a result, GRIT achieves exceptional data efficiency, requiring as few as 20 image-question-answer triplets from existing datasets. Comprehensive evaluations demonstrate that GRIT effectively trains MLLMs to produce coherent and visually grounded reasoning chains, successfully unifying reasoning and grounding abilities.

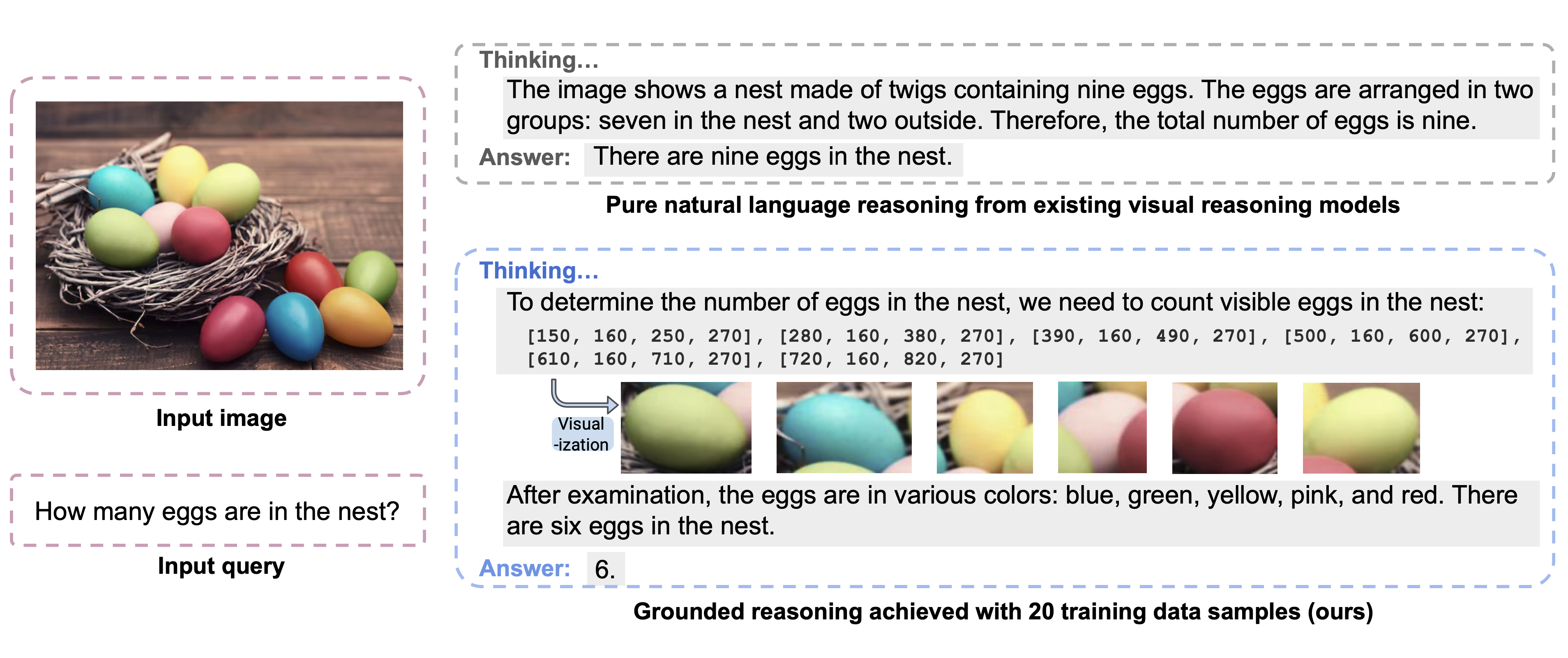

GRIT employs GRPO-GR, a reinforcement learning approach that optimizes a policy π_θ to generate grounded reasoning sequences using three reward components:

1. Grounded-reasoning-format reward (r_format): Encourages correct use of the special tokens that structure the reasoning, along with syntactically valid bounding boxes.

2. Grounded-target-counting reward (r_count): For counting tasks, checks that the number of bounding boxes matches the ground-truth count.

3. GPT-aided answer-accuracy reward (r_ans): Combines a GPT-4o evaluation of answer correctness with BLEU similarity to the ground truth.
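The format and counting rewards listed above can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the box syntax (`[x1, y1, x2, y2]` with integer pixel coordinates), the function names, the binary reward values, and the unweighted sum are not taken from the GRIT implementation. The sketch checks only bounding box syntax, not special tokens, and takes the answer-accuracy score as a precomputed number rather than calling GPT-4o or computing BLEU.

```python
import re

# Assumed box syntax: four non-negative integers inside square brackets.
BOX_PATTERN = re.compile(r"\[\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\]")


def extract_boxes(text):
    """Return every well-formed (x1, y1, x2, y2) box found in the text."""
    boxes = []
    for match in BOX_PATTERN.finditer(text):
        x1, y1, x2, y2 = map(int, match.groups())
        # Keep only boxes with positive width and height.
        if x1 < x2 and y1 < y2:
            boxes.append((x1, y1, x2, y2))
    return boxes


def format_reward(text):
    """Simplified format check: 1.0 if at least one valid box appears."""
    return 1.0 if extract_boxes(text) else 0.0


def count_reward(text, gt_count):
    """1.0 if the number of valid boxes equals the ground-truth count."""
    return 1.0 if len(extract_boxes(text)) == gt_count else 0.0


def total_reward(text, answer_score, gt_count=None):
    """Unweighted sum of the components (the weighting is an assumption).

    answer_score stands in for the GPT-aided answer-accuracy reward.
    gt_count is given only for counting tasks.
    """
    reward = format_reward(text) + answer_score
    if gt_count is not None:
        reward += count_reward(text, gt_count)
    return reward


output = "Two zebras at [10, 20, 110, 220] and [150, 30, 260, 240]."
print(extract_boxes(output))
print(total_reward(output, answer_score=1.0, gt_count=2))
```

Note that none of these functions needs a reference bounding box or a reference reasoning chain. They read only the model output, the ground-truth answer (or count), and an external answer score.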

Because these rewards depend only on the output format and the final answer, the approach needs no explicit reasoning annotations or bounding box labels. It is therefore highly data-efficient, maintaining strong performance with as few as 20 training examples.
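GRPO-GR is described as building on GRPO, which scores each sampled response relative to the other responses drawn for the same prompt. The sketch below shows that standard group-relative normalization in plain Python. How GRPO-GR itself configures this step is not stated here, so the function name, the `eps` constant, and the use of the population standard deviation are assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize the rewards of one sampled group to zero mean, unit scale.

    rewards: scalar rewards for several responses to the same prompt.
    eps: small constant (assumed value) that avoids division by zero
         when every response in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four responses to one question, each scored by summed reward components.
print(group_relative_advantages([3.0, 1.0, 2.0, 2.0]))
```

Responses that score above the group mean receive positive advantages and are reinforced. Those below the mean are discouraged. A group with identical rewards yields zero advantage for every response.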

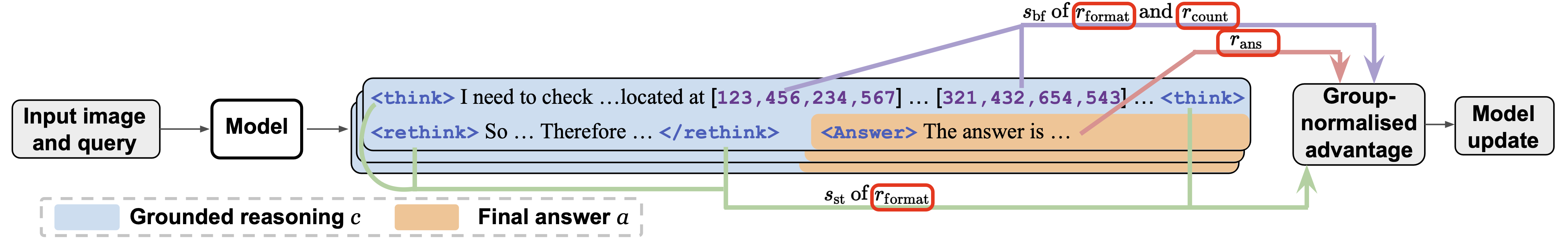

Using the GRIT method with GRPO-GR reinforcement learning, we train two pre-trained MLLMs, Qwen2.5-VL-3B and InternVL-3-2B, on only 20 image-question-answer triplets drawn from two existing datasets, VSR and TallyQA. Comprehensive evaluations show that GRIT-trained models outperform baselines overall across six test sets.


Results confirm that GRIT efficiently unifies the previously separate grounding and reasoning capabilities of MLLMs, achieving strong performance across diverse visual reasoning tasks.

Question: How many zebras are pictured here?

Model output:

Ground truth answer: 7

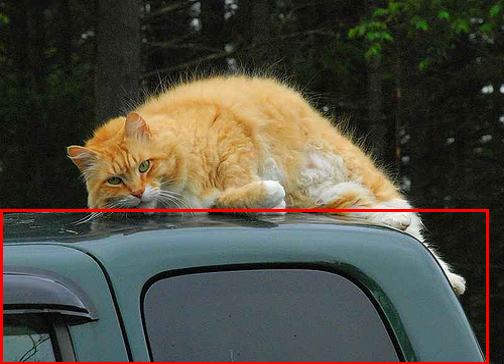

Question: Is the truck beneath the cat?

Model output:

Ground truth answer: Yes

Question: Is there a knife in the image?

Model output:

Ground truth answer: No

Inference examples of Qwen2.5-VL-GRIT.

@misc{fan2025gritteachingmllmsthink,
  title={GRIT: Teaching MLLMs to Think with Images},
  author={Yue Fan and Xuehai He and Diji Yang and Kaizhi Zheng and Ching-Chen Kuo and Yuting Zheng and Sravana Jyothi Narayanaraju and Xinze Guan and Xin Eric Wang},
  year={2025},
  eprint={2505.15879},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.15879},
}